Artificial Intelligence (AI) is transforming industries by enhancing decision-making, automating processes, and driving innovation. From healthcare to finance, its applications are far-reaching, but this widespread adoption also introduces critical security challenges. Our latest white paper, AI Security, examines these challenges and presents actionable solutions for securing AI systems, helping organizations build trust, resilience, and competitive advantage.

Challenges of AI Security

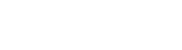

AI systems rely on sensitive data and complex models, making them attractive targets for attackers. Key risks include:

- Data privacy concerns: sensitive training and inference data can be exposed or misused, compromising user confidentiality and potentially violating data-protection regulations.

- Adversarial attacks: subtly manipulating input data to mislead AI models into incorrect outputs, with potentially severe consequences in sectors like healthcare, e-commerce, and autonomous vehicles.

- Model inversion attacks: querying a model and analyzing its outputs to reconstruct sensitive information about its training data, leading to breaches of confidentiality and raising ethical concerns, especially in areas like healthcare and law enforcement.

- Intellectual property (IP) theft: AI models can embody years of research and investment, and stolen models can erode competitive advantages and lead to financial losses.

- Model integrity risks: deployments in remote or untrusted environments make models vulnerable to tampering, potentially leading to failures in critical infrastructure.
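To make the adversarial-attack risk concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), a well-known attack technique, applied to a toy logistic-regression classifier. The weights, bias, input, and epsilon are made-up values for illustration and are not drawn from the white paper; real attacks target much larger models, but the principle is the same.

```python
import math

# Hypothetical toy model: logistic regression with fixed, made-up weights.
WEIGHTS = [2.0, -1.5, 0.5]
BIAS = -0.2

def predict_proba(x):
    """Probability that input x belongs to the positive class."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))

def fgsm(x, true_label, epsilon):
    """Fast Gradient Sign Method: shift each feature by epsilon in the
    direction that increases the model's loss on the true label."""
    p = predict_proba(x)
    # For logistic regression with cross-entropy loss, dLoss/dx_i = (p - y) * w_i.
    grad = [(p - true_label) * w for w in WEIGHTS]
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

x = [0.6, 0.2, 0.1]                      # hypothetical input, classified as positive
x_adv = fgsm(x, true_label=1, epsilon=0.3)

print(f"original:    p={predict_proba(x):.3f}")
print(f"adversarial: p={predict_proba(x_adv):.3f}")
```

No feature moves by more than 0.3, yet the predicted probability drops below 0.5 and the classification flips, which is exactly the kind of manipulation adversarial training aims to resist.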

Solutions for Securing AI

The white paper outlines state-of-the-art technologies to address these challenges, including:

- Homomorphic encryption: enables computations on encrypted data without first decrypting it.

- Secure multi-party computation: allows several parties to jointly compute a result over their private data without revealing their individual inputs to one another.

- Differential privacy: protects individuals by adding calibrated statistical noise to data or computations, so that results reveal little about any single person.

- Confidential computing: protects data in use by processing it inside hardware-based secure environments such as Trusted Execution Environments (TEEs).

- Federated learning: enables collaborative model training without centralizing sensitive data; participants train locally and share only model updates, which helps preserve privacy.

- Remote attestation: verifies the integrity and authenticity of AI systems in distributed environments.

- Adversarial training: strengthens models against manipulation by exposing them to adversarial examples during training.
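Two of these techniques can be illustrated in a few lines. The sketch below shows textbook versions of additive secret sharing (a basic building block of secure multi-party computation) and the Laplace mechanism (a standard way to achieve differential privacy). These are assumptions chosen for illustration, not the specific methods described in the white paper; the modulus, function names, and sample records are hypothetical, and all "parties" run in a single process.

```python
import random
import secrets

# --- Secure multi-party computation: additive secret sharing ---
# Illustrative modulus chosen for this sketch; inputs must be in [0, PRIME).
PRIME = 2**61 - 1

def share(value, n_parties):
    """Split value into n_parties random shares that sum to value mod PRIME.
    Any n_parties - 1 of the shares are uniformly random and reveal nothing."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares

def secure_sum(private_values):
    """Simulate n parties computing the sum of their private values.
    A real protocol would exchange shares over a network."""
    n = len(private_values)
    # Party i splits its input and sends share j to party j.
    all_shares = [share(v, n) for v in private_values]
    # Each party j adds up only the shares it received.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)]
    # Combining the partial sums reveals the total, not the individual inputs.
    return sum(partial_sums) % PRIME

# --- Differential privacy: the Laplace mechanism ---
def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records, predicate, epsilon, rng):
    """Epsilon-differentially private count. A counting query has sensitivity 1
    (one person changes it by at most 1), so the noise scale is 1 / epsilon."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)

print(secure_sum([52_000, 61_000, 47_500]))  # 160500
ages = [34, 29, 41, 52, 38, 27, 45]  # hypothetical records
rng = random.Random()
print(private_count(ages, lambda a: a > 40, epsilon=0.5, rng=rng))
```

Smaller values of epsilon add more noise and give stronger privacy. As a design caveat, `random.Random` is not a cryptographically secure generator and naive floating-point Laplace sampling has known weaknesses, so production systems should rely on a vetted differential-privacy library rather than this sketch.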

CYSEC’s Innovative Approach

At CYSEC, we are at the forefront of AI security with our solution, ARCA Trusted OS. This hardened operating system integrates seamlessly with cutting-edge security tools to:

- Ensure tamper-proof AI deployment in distributed systems.

- Enable scalable and secure orchestration of AI workloads across cloud and edge environments.

- Leverage the Attested Launch Protocol to validate the integrity of AI models during operation.
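The general idea behind validating a model's integrity before it runs can be sketched as a "measure and compare" check. The example below is a generic, simplified illustration and is not ARCA Trusted OS or the Attested Launch Protocol: it simply hashes a model artifact and compares the result with a reference digest. In real remote attestation, the measurement is taken by trusted hardware (for example a TEE or TPM), signed, and checked by a remote verifier; the function names and model bytes here are hypothetical.

```python
import hashlib
import hmac

def measure(model_bytes: bytes) -> str:
    """Return a SHA-256 'measurement' (hex digest) of a model artifact."""
    return hashlib.sha256(model_bytes).hexdigest()

def verify_before_launch(model_bytes: bytes, expected_digest: str) -> bool:
    """Allow launch only if the measurement matches the reference value.
    hmac.compare_digest compares the two strings in constant time."""
    return hmac.compare_digest(measure(model_bytes), expected_digest)

# Hypothetical serialized model weights.
model = b"layer1:0.12,0.98;layer2:-0.44,0.31"
# Reference digest, assumed to be recorded in a trusted setting at build time.
reference = measure(model)

print(verify_before_launch(model, reference))     # True
tampered = model.replace(b"0.98", b"9.98")        # a single modified weight
print(verify_before_launch(tampered, reference))  # False
```

Even a one-character change to the weights produces a different digest, so a tampered model is rejected before it can run.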

Securing the Future of AI

AI’s potential is immense, but its adoption must be paired with robust security measures. Our white paper offers a roadmap for organizations to protect their AI investments while navigating an evolving threat landscape.

Download the White Paper:

Discover how to build trust, resilience, and innovation in AI systems.